reverse engineering granola for obsidian export

granola <> obsidian

for those unaware, granola is an app that transcribes your meetings and generates summary notes from them.

the catch: i'm already loyal to obsidian as the one place where i keep my notes, so i wanted to see if i could port my granola notes there.

intercepting granola's traffic

the first thing i learned is that granola notes aren't stored locally; they live in granola's own cloud. that made getting my notes out less straightforward and meant i had to do more poking and prodding.

i decided to use mitmproxy to set up a local proxy to intercept & inspect requests.

```bash
brew install --cask mitmproxy
```

after installing, i ran mitmproxy once so it would generate its root certificate authority (CA) under ~/.mitmproxy. mitmproxy intercepts HTTPS traffic as a man in the middle: for each site you visit, it generates a TLS certificate on the fly and signs it with that CA.

by default, your system and browser will not trust mitmproxy's certificate, so we'll have to manually trust it by running this command:

```bash
sudo security add-trusted-cert -d -r trustRoot \
  -k /Library/Keychains/System.keychain \
  ~/.mitmproxy/mitmproxy-ca-cert.pem
```

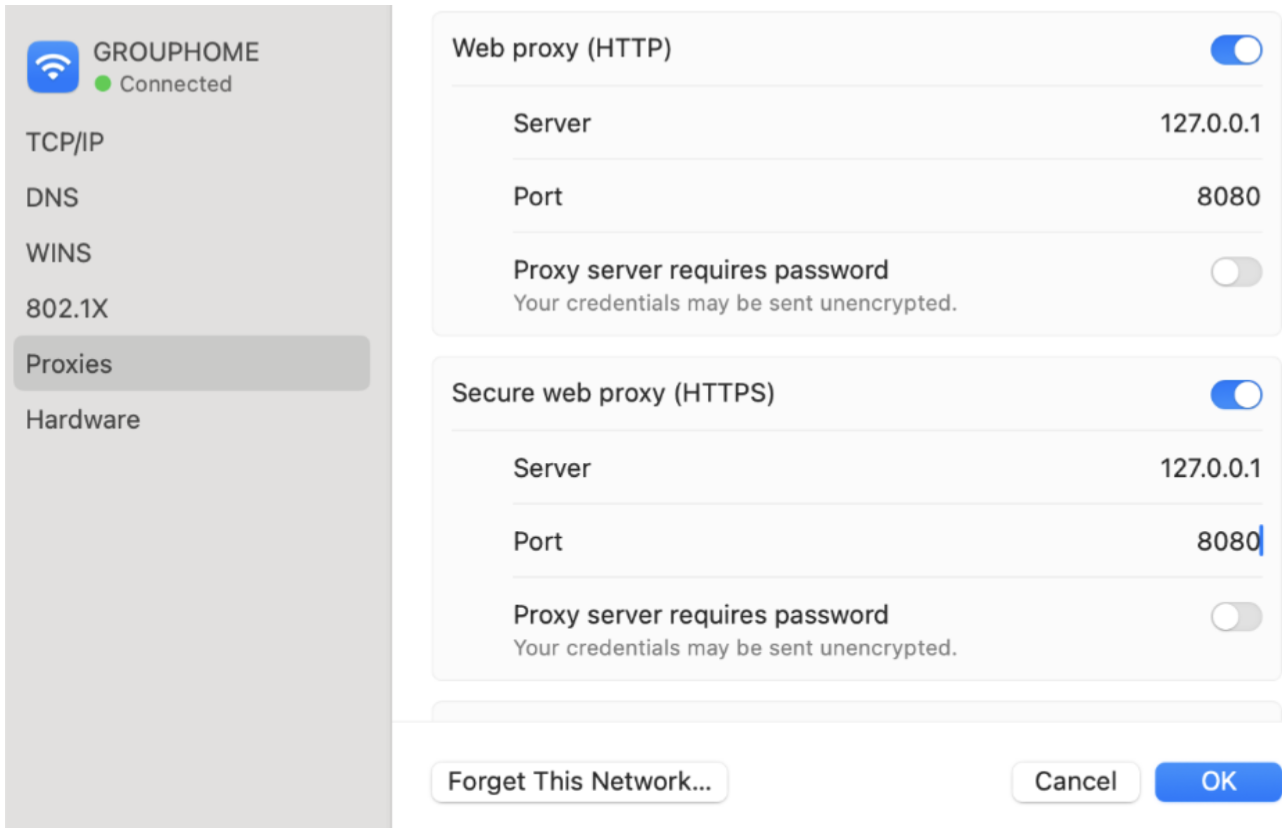
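trusting a root CA system-wide means your mac will accept anything signed with mitmproxy's key, so it's handy to know exactly which cert you added (say, to find and delete it in Keychain Access once you're done exporting). here's a small stdlib-only sketch that prints the SHA-256 fingerprint of the CA file, assuming it sits at mitmproxy's default path:

```python
"""Print the SHA-256 fingerprint of mitmproxy's root CA certificate."""
import hashlib
import ssl
from pathlib import Path

# mitmproxy's default location for the CA cert it generates on first run
DEFAULT_CA = Path.home() / ".mitmproxy" / "mitmproxy-ca-cert.pem"


def ca_fingerprint(pem_path: Path) -> str:
    """Return the cert's SHA-256 fingerprint as colon-separated uppercase hex."""
    pem = pem_path.read_text(encoding="ascii")
    # strips the BEGIN/END CERTIFICATE lines and base64-decodes the body to DER
    der = ssl.PEM_cert_to_DER_cert(pem)
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))
```

usage: `print(ca_fingerprint(DEFAULT_CA))`, then compare the output with the SHA-256 fingerprint Keychain Access shows for the mitmproxy certificate.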

next, we need to configure granola to route through our proxy. set your network proxy settings to point to 127.0.0.1:8080 (mitmproxy's default), then launch granola.

on mac, you can do this in System Settings under Wi-Fi > Details... > Proxies: turn on both "Web proxy (HTTP)" and "Secure web proxy (HTTPS)" and point each one at server 127.0.0.1, port 8080.

reverse-engineering the api

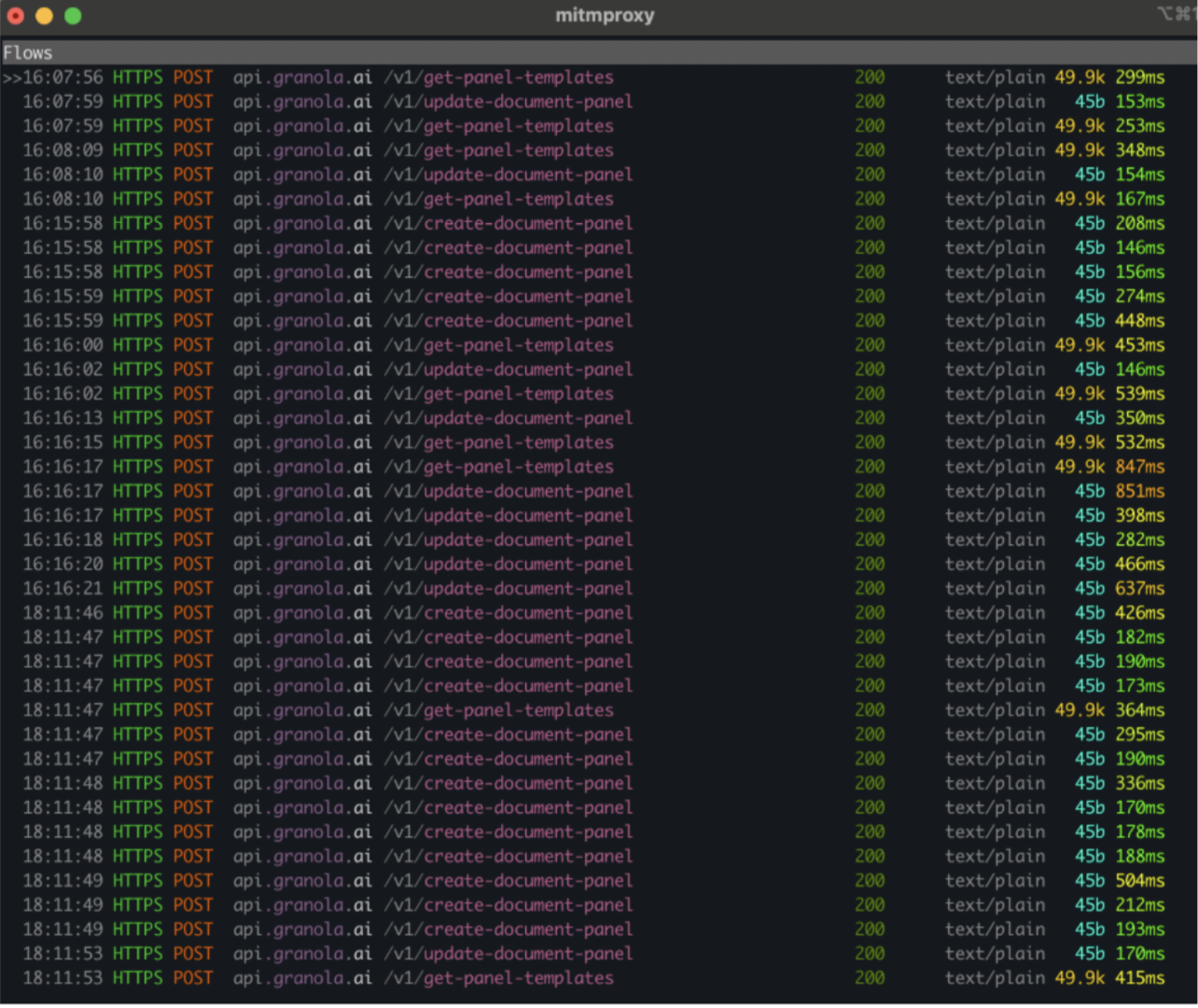

in mitmproxy, there's a lot going on. you can make your life easier by using the filter tool: press f and filter for api.granola.ai.
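if clicking through flows gets tedious, mitmproxy can also load a python addon script. below is a minimal sketch (the file name granola_dump.py and the output file granola_flows.jsonl are my own hypothetical choices) that, when run with `mitmdump -s granola_dump.py`, appends every api.granola.ai request/response body to a JSON-lines file you can grep later. headers are deliberately not recorded so the bearer token doesn't end up on disk. in the interactive ui, the filter expression `~d api.granola.ai` matches on domain.

```python
"""mitmproxy addon sketch: log api.granola.ai bodies to a JSON-lines file.

Run with:  mitmdump -s granola_dump.py
"""
from __future__ import annotations

import json
from pathlib import Path
from typing import TYPE_CHECKING

if TYPE_CHECKING:  # only needed for type hints; mitmproxy loads this script itself
    from mitmproxy import http

TARGET_HOST = "api.granola.ai"
OUT_FILE = Path("granola_flows.jsonl")  # hypothetical output path, change as needed


def record(flow: http.HTTPFlow, out_file: Path) -> bool:
    """Append one flow to out_file; return True if it was recorded."""
    if flow.request.pretty_host != TARGET_HOST or flow.response is None:
        return False
    entry = {
        "method": flow.request.method,
        "path": flow.request.path,
        "status": flow.response.status_code,
        # bodies only; headers (including Authorization) are intentionally skipped
        "request_body": flow.request.get_text(strict=False),
        "response_body": flow.response.get_text(strict=False),
    }
    with out_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return True


def response(flow: http.HTTPFlow) -> None:
    """mitmproxy event hook, called once a full response has been read."""
    record(flow, OUT_FILE)
```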

investigation

- /v1/get-document-set returns a json with the ids of all the documents you own

- /v1/get-document-metadata returns a document's metadata, including its title

- it seems that documents aren't stored as one blob; instead they're made up of "panels", which i believe correspond to the h1 sections you see in the note

- the request bodies of /v1/update-document-panel and /v1/create-document-panel contain the actual content of the document

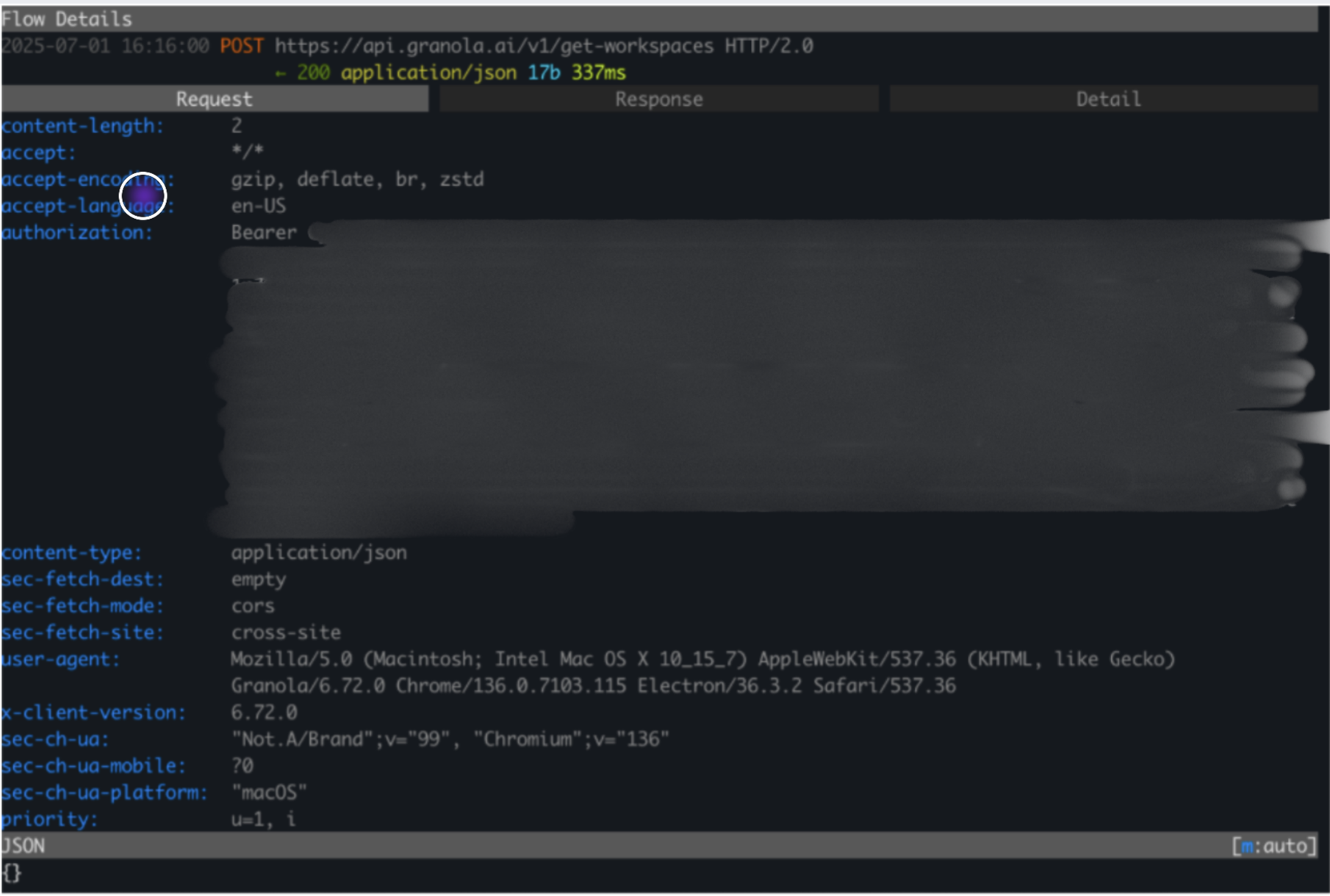
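if a note really is a list of panels, one way to export the whole note rather than a single section is to splice the panels' prosemirror docs together before converting. here's a sketch, assuming each panel looks like `{"content": {"type": "doc", "content": [...]}}`, which is the shape the exporter script below appears to expect; `merge_panels` is a hypothetical helper of mine, not something granola's api provides.

```python
from typing import Any


def merge_panels(panels: list[dict[str, Any]]) -> dict[str, Any]:
    """Combine several panels' ProseMirror bodies into one doc node.

    Assumes each panel keeps its body under "content". A top-level "doc"
    contributes its children; any other node is kept whole.
    """
    merged: list[dict[str, Any]] = []
    for panel in panels:
        body = panel.get("content")
        if not isinstance(body, dict):
            continue  # skip panels with no (or non-ProseMirror) content
        if body.get("type") == "doc":
            merged.extend(body.get("content", []))
        else:
            merged.append(body)
    return {"type": "doc", "content": merged}
```

in the script, `try_fetch_content` could then return `merge_panels(panels)` instead of `panels[0].get("content")`, and `prosemirror_to_md` would render every panel in order.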

authorization

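every request to api.granola.ai carries the same authorization header (`Authorization: Bearer <token>`). i grabbed the bearer token and stored it as `GRANOLA_BEARER_TOKEN=<token>` in a granola_config.env file next to the script, which is where the script looks for it.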
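before running a full export, a cheap way to check the token is to ask get-document-set for a single document and look for a 200 versus a 401/403. a stdlib-only sketch: the `{"limit": 1, "offset": 0}` body and the client headers mirror what the exporter script sends, and i'm assuming an expired or bad token comes back as 401 or 403.

```python
import json
import urllib.error
import urllib.request

LIST_URL = "https://api.granola.ai/v1/get-document-set"


def build_list_request(token: str, limit: int = 1) -> urllib.request.Request:
    """POST request for the first `limit` documents, authorized with the bearer token."""
    body = json.dumps({"limit": limit, "offset": 0}).encode("utf-8")
    return urllib.request.Request(
        LIST_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            # copied from the exporter script's HEADERS
            "User-Agent": "Granola/6.72.0",
            "X-Client-Version": "6.72.0",
        },
    )


def token_is_valid(token: str) -> bool:
    """True on HTTP 200; False on 401/403 (assumed to mean an expired/bad token)."""
    try:
        with urllib.request.urlopen(build_list_request(token), timeout=15) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code in (401, 403):
            return False
        raise  # anything else (5xx, schema changes, ...) is worth seeing in full
```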

building the exporter

after some investigation, i asked my best friend and close confidant mr. opus to write a python script to automate the export process. the script works as of this writing, with one caveat: granola seems to have a token refresh mechanism, and my token went stale ~2 weeks later, so you'll need to grab a fresh token from mitmproxy every so often. this isn't true set-and-forget automation, but feel free to use it as a starting point, especially if the api schema changes down the line.
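since the token eventually goes stale, it helps to know when. if the bearer token is a JWT (an assumption on my part: three dot-separated base64url segments), its payload usually carries an `exp` claim holding the expiry as a unix timestamp, which you can read without verifying the signature:

```python
import base64
import json
from datetime import datetime, timezone
from typing import Optional


def jwt_expiry(token: str) -> Optional[datetime]:
    """Return a JWT's `exp` claim as an aware UTC datetime, or None.

    Decodes the payload only; the signature is NOT verified, so treat the
    result purely as a hint for when to grab a fresh token.
    """
    parts = token.split(".")
    if len(parts) != 3:
        return None  # not a JWT; nothing to decode
    payload_b64 = parts[1] + "=" * (-len(parts[1]) % 4)  # restore stripped padding
    try:
        payload = json.loads(base64.urlsafe_b64decode(payload_b64))
    except ValueError:  # covers bad base64, bad UTF-8 and bad JSON
        return None
    exp = payload.get("exp") if isinstance(payload, dict) else None
    if not isinstance(exp, (int, float)):
        return None
    return datetime.fromtimestamp(exp, tz=timezone.utc)
```

usage: `print(jwt_expiry(os.environ["GRANOLA_BEARER_TOKEN"]))`. if it prints `None`, the token probably isn't a JWT and you're back to waiting for a 401.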

ps, as a chronically online information hoarder, i am always looking for better systems to consume, organize, and retrieve the many gigabytes of information i come across every day. if you have opinionated obsidian/note taking workflows, i'd like to chat :)

before running the script, create a python virtual environment & pip install requests

voila! happy information-hoarding! big shoutout to Joseph Thacker for inspiration

```python
#!/usr/bin/env python3
"""
Export Granola meeting notes → Markdown files.

Requirements
------------
python -m pip install requests

GRANOLA_BEARER_TOKEN must be set, either as an environment variable or in a
granola_config.env file next to this script.
"""
import json
import logging
import os
import sys
from pathlib import Path

import requests

# -----------------------------------------------------------------------------
# CONFIG
# -----------------------------------------------------------------------------
OUTPUT_DIR = Path.home() / "obsidian/granola"  # change if you want
LIST_URL = "https://api.granola.ai/v1/get-document-set"
META_URL = "https://api.granola.ai/v1/get-document-metadata"
PANELS_URL = "https://api.granola.ai/v1/get-document-panels"
ALT_PANEL_URL = "https://api.granola.ai/v1/get-document"
LIST_PAGE_SIZE = 100  # Granola accepts 100 max

# -----------------------------------------------------------------------------
# HELPERS
# -----------------------------------------------------------------------------
log = logging.getLogger("granola_export")
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(message)s",
    datefmt="%H:%M:%S",
)


def load_env_file():
    """Load environment variables from granola_config.env if it exists."""
    env_file = Path(__file__).parent / "granola_config.env"
    if env_file.exists():
        log.info("Loading config from %s", env_file)
        with open(env_file, "r", encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if line and not line.startswith("#") and "=" in line:
                    key, value = line.split("=", 1)
                    # accept KEY=value as well as KEY="value" / KEY='value'
                    os.environ[key.strip()] = value.strip().strip("\"'")
                    log.info("Loaded environment variable: %s", key.strip())
    else:
        log.warning("Config file not found: %s", env_file)


def bearer_token() -> str:
    # Load config file first
    load_env_file()
    tok = os.getenv("GRANOLA_BEARER_TOKEN")
    if not tok:
        log.error("GRANOLA_BEARER_TOKEN environment variable is not set.")
        sys.exit(1)
    # log only the last few characters so the token doesn't leak into logs
    log.info("Using bearer token ending in ...%s", tok[-4:])
    return tok


HEADERS = {
    "Content-Type": "application/json",
    "Accept": "*/*",
    "User-Agent": "Granola/6.72.0",
    "X-Client-Version": "6.72.0",
    "Authorization": f"Bearer {bearer_token()}",
}


def prosemirror_to_md(node):
    """Very small converter – enough for headings, paragraphs, bullet lists."""
    if not isinstance(node, dict):
        return ""
    t = node.get("type")
    if t == "text":
        return node.get("text", "")
    if t == "paragraph":
        return "".join(prosemirror_to_md(c) for c in node.get("content", [])) + "\n\n"
    if t == "heading":
        level = node.get("attrs", {}).get("level", 1)
        inner = "".join(prosemirror_to_md(c) for c in node.get("content", []))
        return f"{'#' * level} {inner}\n\n"
    if t == "bulletList":
        lines = []
        for li in node.get("content", []):
            if li.get("type") == "listItem":
                txt = "".join(prosemirror_to_md(c) for c in li.get("content", []))
                lines.append(f"- {txt.strip()}")
        return "\n".join(lines) + "\n\n"
    # fall-through (doc, listItem, unknown nodes): recurse into children
    return "".join(prosemirror_to_md(c) for c in node.get("content", []))


def sanitize_filename(s: str) -> str:
    bad = '<>:"/\\|?*'
    return "".join(c for c in s if c not in bad).replace(" ", "_") or "Untitled"


# -----------------------------------------------------------------------------
# MAIN FLOW
# -----------------------------------------------------------------------------
def fetch_doc_ids():
    """Paginate through /v1/get-document-set until no IDs left."""
    ids = []
    offset = 0
    while True:
        payload = {"limit": LIST_PAGE_SIZE, "offset": offset}
        r = requests.post(LIST_URL, headers=HEADERS, json=payload, timeout=15)
        r.raise_for_status()
        page = r.json()
        # The API returns documents as an object with IDs as keys
        documents_obj = page.get("documents", {})
        batch = list(documents_obj.keys()) if documents_obj else []
        ids.extend(batch)
        if len(batch) < LIST_PAGE_SIZE:
            break
        offset += LIST_PAGE_SIZE
    return ids


def fetch_metadata(doc_id):
    """Returns metadata dict."""
    r = requests.post(
        META_URL,
        headers=HEADERS,
        json={"document_id": doc_id},
        timeout=15,
    )
    r.raise_for_status()
    return r.json()


def ensure_output_dir():
    OUTPUT_DIR.mkdir(parents=True, exist_ok=True)
    log.info("Saving Markdown to %s", OUTPUT_DIR)


def try_fetch_content(doc_id):
    """Try multiple API endpoints to fetch document content."""
    # Strategy 1: Try get-document-panels (plural)
    try:
        r = requests.post(
            PANELS_URL,
            headers=HEADERS,
            json={"document_id": doc_id},
            timeout=15,
        )
        if r.status_code == 200:
            panels_data = r.json()
            if isinstance(panels_data, list) and panels_data:
                return panels_data[0].get("content")
            elif isinstance(panels_data, dict) and "panels" in panels_data:
                panels = panels_data["panels"]
                if panels:
                    return panels[0].get("content")
    except Exception as e:
        log.debug("get-document-panels failed: %s", e)

    # Strategy 2: Try alternative get-document endpoint
    try:
        r = requests.post(
            ALT_PANEL_URL,
            headers=HEADERS,
            json={"document_id": doc_id},
            timeout=15,
        )
        if r.status_code == 200:
            return r.json().get("content")
    except Exception as e:
        log.debug("get-document failed: %s", e)

    return None


def save_doc(meta, doc_id):
    title = meta.get("title") or "Untitled Granola Note"
    log.info("Processing: %s", title)

    # Try to get content from metadata first, then from API
    panel_content = meta.get("content") or try_fetch_content(doc_id)
    if panel_content:
        md_body = prosemirror_to_md(panel_content)
    else:
        log.warning("Could not retrieve content for %s", title)
        md_body = f"# {title}\n\n*Content could not be retrieved from Granola API*\n\n"

    fm = [
        "---",
        f"granola_id: {doc_id}",
        # json.dumps yields a quoted, escaped string that is also valid YAML,
        # and avoids backslashes inside f-string braces (a SyntaxError before 3.12)
        f"title: {json.dumps(title, ensure_ascii=False)}",
    ]
    for field in ("created_at", "updated_at"):
        if meta.get(field):
            fm.append(f"{field}: {meta[field]}")
    fm.append("---\n")

    final = "\n".join(fm) + md_body
    fn = OUTPUT_DIR / f"{sanitize_filename(title)}.md"
    fn.write_text(final, encoding="utf-8")
    log.info("wrote %s", fn.name)


def main():
    ensure_output_dir()
    log.info("Fetching document IDs …")
    ids = fetch_doc_ids()
    log.info("Found %d docs", len(ids))
    for doc_id in ids:
        try:
            meta = fetch_metadata(doc_id)
            save_doc(meta, doc_id)
        except Exception as e:
            log.exception("doc %s failed: %s", doc_id, e)


if __name__ == "__main__":
    main()
```
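the script's `prosemirror_to_md` is intentionally tiny: it drops bold/italic/links and renders numbered lists as plain text. here's a sketch of a slightly bigger converter (`pm_to_md` and its helpers are hypothetical names), assuming granola's editor uses the standard tiptap/prosemirror names (`bold`, `italic`, `code`, `strike`, `link` marks; `orderedList`, `hardBreak` nodes). i haven't confirmed those names against granola's payloads, so check a captured response first.

```python
from typing import Any

# Markdown wrappers for inline marks (names assume tiptap's defaults)
MARK_WRAPPERS = {"bold": "**", "italic": "*", "code": "`", "strike": "~~"}


def render_text(node: dict[str, Any]) -> str:
    """Render a text node, wrapping it in each of its marks in order."""
    text = node.get("text", "")
    for mark in node.get("marks") or []:
        kind = mark.get("type")
        if kind in MARK_WRAPPERS:
            wrap = MARK_WRAPPERS[kind]
            text = f"{wrap}{text}{wrap}"
        elif kind == "link":
            href = (mark.get("attrs") or {}).get("href", "")
            text = f"[{text}]({href})"
    return text


def children_md(node: dict[str, Any]) -> str:
    """Concatenate the Markdown of all child nodes."""
    return "".join(pm_to_md(child) for child in node.get("content") or [])


def render_list(node: dict[str, Any], ordered: bool) -> str:
    """Render a bulletList/orderedList, indenting nested content under each item."""
    start = (node.get("attrs") or {}).get("start", 1) if ordered else 1
    lines = []
    for i, item in enumerate(node.get("content") or [], start=start):
        marker = f"{i}." if ordered else "-"
        # drop blank lines to keep the list tight, then indent continuation
        # lines (e.g. a nested list) so Markdown keeps them inside this item
        parts = [ln for ln in children_md(item).splitlines() if ln.strip()]
        indent = " " * (len(marker) + 1)
        lines.append(f"{marker} " + f"\n{indent}".join(parts))
    return "\n".join(lines) + "\n\n"


def pm_to_md(node: Any) -> str:
    """Convert a ProseMirror JSON node (usually the top-level doc) to Markdown."""
    if not isinstance(node, dict):
        return ""
    kind = node.get("type")
    if kind == "text":
        return render_text(node)
    if kind == "hardBreak":
        return "  \n"  # two trailing spaces = Markdown line break
    if kind == "paragraph":
        return children_md(node) + "\n\n"
    if kind == "heading":
        level = (node.get("attrs") or {}).get("level", 1)
        return f"{'#' * level} {children_md(node)}\n\n"
    if kind == "bulletList":
        return render_list(node, ordered=False)
    if kind == "orderedList":
        return render_list(node, ordered=True)
    return children_md(node)  # doc, listItem, unknown nodes: recurse
```

to try it, swap `prosemirror_to_md(panel_content)` in `save_doc` for `pm_to_md(panel_content)`. unknown node types still fall through to their children, so nothing is silently dropped beyond formatting the converter doesn't know about.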